Website classification

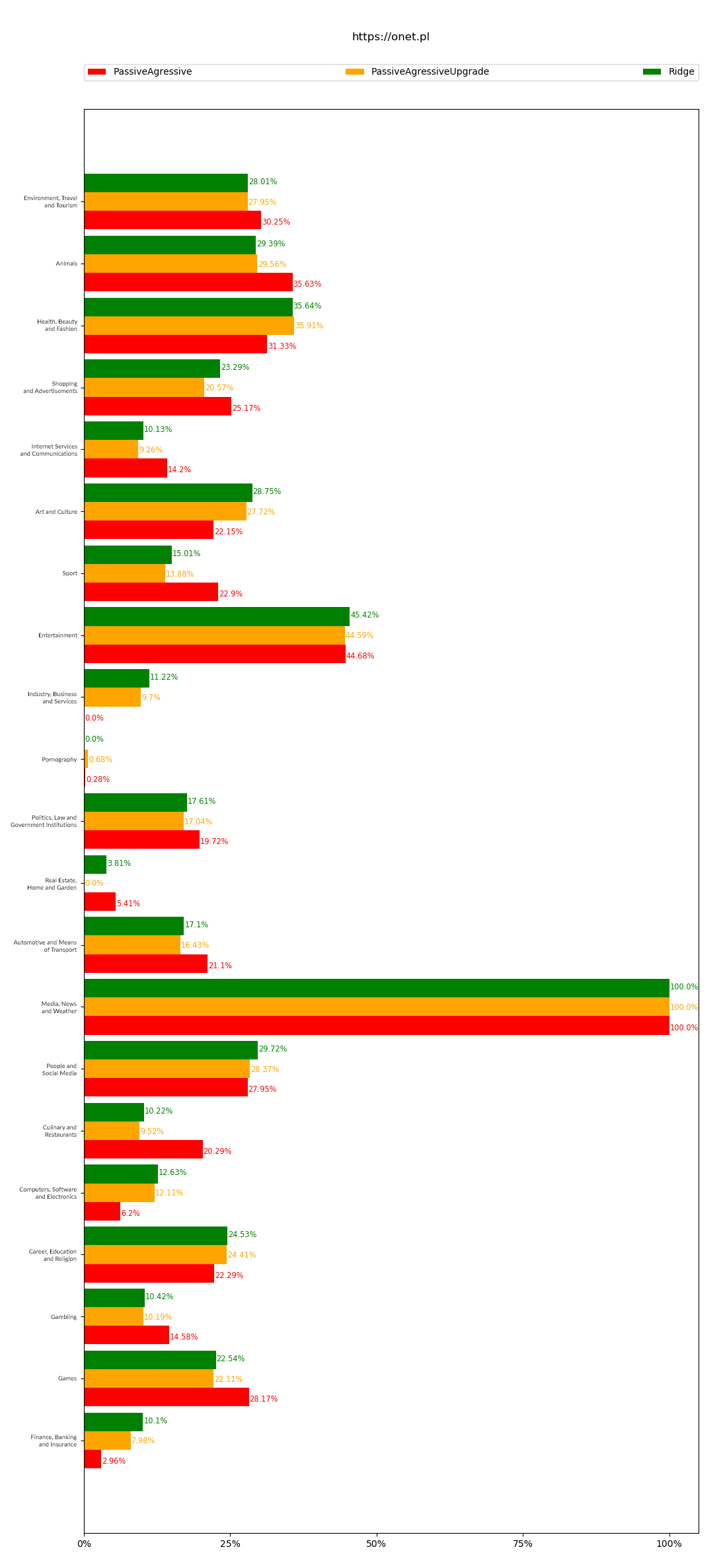

A compilation of the results of the two Ridge algorithms and the two versions of the Passive Aggressive algorithm, whose performance was confirmed experimentally.

Compared with the other available classifiers, the listed algorithms return the best results. They are also configurable, so their effectiveness can be increased, but at the cost of longer classification times.
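The article does not say which implementation it uses. scikit-learn, for example, offers these two families as `RidgeClassifier` (regularization strength `alpha`) and `PassiveAggressiveClassifier` (aggressiveness `C`, iteration limit `max_iter`). To show where such configuration knobs sit, here is a minimal pure-Python sketch of the standard multiclass Passive-Aggressive (PA-I) update. The bag-of-words features, the toy categories, and the names `PassiveAggressive`, `features`, and `epochs` are assumptions for illustration, not the tool's actual implementation.

```python
from collections import Counter


def features(text):
    """Bag-of-words features: lowercase word counts (a simplifying assumption)."""
    return Counter(text.lower().split())


class PassiveAggressive:
    """Multiclass Passive-Aggressive (PA-I) sketch with one weight vector per category.

    C caps the step size of a single update (the "aggressiveness");
    epochs is the number of passes over the training data.
    """

    def __init__(self, categories, C=1.0, epochs=5):
        self.categories = list(categories)
        self.C = C
        self.epochs = epochs
        self.weights = {c: {} for c in self.categories}

    def score(self, x, category):
        w = self.weights[category]
        return sum(w.get(term, 0.0) * value for term, value in x.items())

    def partial_fit(self, x, label):
        if not x:
            return  # nothing to learn from an empty page
        # The highest-scoring wrong category is the one to push away from.
        rival = max((c for c in self.categories if c != label),
                    key=lambda c: self.score(x, c))
        loss = max(0.0, 1.0 - (self.score(x, label) - self.score(x, rival)))
        if loss == 0.0:
            return  # passive: a margin of at least 1 is already reached
        sq_norm = sum(v * v for v in x.values())
        tau = min(self.C, loss / (2.0 * sq_norm))  # aggressive, but capped by C
        w_label, w_rival = self.weights[label], self.weights[rival]
        for term, value in x.items():
            w_label[term] = w_label.get(term, 0.0) + tau * value
            w_rival[term] = w_rival.get(term, 0.0) - tau * value

    def fit(self, texts, labels):
        for _ in range(self.epochs):
            for text, label in zip(texts, labels):
                self.partial_fit(features(text), label)
        return self

    def predict(self, text):
        x = features(text)
        return max(self.categories, key=lambda c: self.score(x, c))


clf = PassiveAggressive(["sports", "politics", "cooking"], C=1.0, epochs=5).fit(
    ["football match goal league", "election parliament vote minister",
     "recipe oven flour sugar"],
    ["sports", "politics", "cooking"],
)
print(clf.predict("league goal"))  # sports
```

Raising `epochs` means more passes over the training data, and a larger `C` allows bigger corrections per example; both change the balance between effectiveness and computation that the paragraph above describes.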

The purpose of implementing and comparing the Ridge and Passive Aggressive algorithms is to visualize the classification problem and to flag problem websites, that is, websites for which the algorithms returned different results. These websites make it possible to keep expanding the category databases with more keywords, using artificial intelligence and detailed security analysis.
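Flagging problem websites amounts to comparing the two classifiers' outputs URL by URL. A minimal sketch, assuming each classifier's results are available as a dictionary mapping URL to predicted category; the function name and the example URLs and categories are hypothetical.

```python
def find_disagreements(predictions_a, predictions_b):
    """Return, sorted, the URLs whose predicted category differs between two classifiers.

    Both arguments map URL -> predicted category. URLs classified by only one
    of the classifiers are ignored.
    """
    shared = predictions_a.keys() & predictions_b.keys()
    return sorted(url for url in shared if predictions_a[url] != predictions_b[url])


# Hypothetical predictions, for illustration only.
ridge_predictions = {
    "https://example.com/a": "sports",
    "https://example.com/b": "news",
    "https://example.com/c": "shopping",
}
pa_predictions = {
    "https://example.com/a": "sports",
    "https://example.com/b": "politics",
    "https://example.com/c": "shopping",
}
problem_sites = find_disagreements(ridge_predictions, pa_predictions)
print(problem_sites)  # ['https://example.com/b']
```

The flagged URLs are the natural candidates for manual review and for adding new keywords to the category databases.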

Website classification – algorithms for classifying websites

There are many classification algorithms based on probability theory. Each approaches the problem differently, so the same website may be assigned different categories by different algorithms. The result depends on the input data, which are the words found on the website. The biggest difficulty with this kind of problem is the huge variety of websites and the freedom web developers have in building them. The algorithm works reliably when a website has a lot of text focused on a specific topic. When the analyzed website has little text, or its text spans several categories, the algorithm may misclassify it.

To maximize the chance of a correct classification, two features were implemented: when the main page does not contain enough text, the classifier also goes through its sub-pages, and instead of a single category it returns the three most likely categories.
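Both features can be sketched in a few lines, assuming the visible text of each page has already been extracted. The 200-word threshold, the URLs, and the keyword sets are hypothetical, and the keyword-count scorer is only a stand-in for the per-category scores a Ridge or Passive Aggressive classifier would produce.

```python
import heapq
from collections import Counter


def collect_words(pages, main_url, subpage_urls, min_words=200):
    """Take the main page's words and add sub-pages until min_words is reached.

    `pages` maps URL -> already-extracted visible text; fetching and HTML
    parsing are out of scope for this sketch.
    """
    words = pages.get(main_url, "").lower().split()
    for url in subpage_urls:
        if len(words) >= min_words:
            break
        words.extend(pages.get(url, "").lower().split())
    return words


def keyword_scores(words, category_keywords):
    """Stand-in scorer: how many words belong to each category's keyword set."""
    counts = Counter(words)
    return {cat: sum(counts[kw] for kw in kws) for cat, kws in category_keywords.items()}


def top_categories(scores, k=3):
    """Return the k highest-scoring categories, best first."""
    return heapq.nlargest(k, scores, key=scores.get)


# Hypothetical site: the main page is too short, so one sub-page is added.
pages = {
    "https://example.com/": "Welcome to our shop",
    "https://example.com/blog": "Football league goal match results and transfer news",
    "https://example.com/store": "Buy football boots in our shop cart price",
}
category_keywords = {
    "sports": {"football", "league", "goal", "match"},
    "shopping": {"shop", "buy", "cart", "price"},
    "news": {"news", "results"},
    "cooking": {"recipe", "oven"},
}
words = collect_words(pages, "https://example.com/",
                      ["https://example.com/blog", "https://example.com/store"],
                      min_words=10)
top3 = top_categories(keyword_scores(words, category_keywords))
print(top3)  # ['sports', 'news', 'shopping']
```

Returning three ranked categories instead of one keeps the second and third candidates visible for mixed-topic sites, such as this hypothetical shop with a sports blog.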

Website results

Because the classifier analyzes URLs based on their text using several algorithms, it is worth visualizing the results in a chart so that they are easy to compare. It is also important to keep classification time as short as possible: for commercial solutions, the key is finding a balance between an algorithm's running time and its effectiveness. For this test, a modified Passive Aggressive algorithm was implemented (marked in yellow in the chart). In practice it achieves slightly better results than the unmodified algorithm, but it takes more than five times longer to classify a page.
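Per-page times like the ones in this section can be measured with a small harness. This is a sketch, assuming each classifier is exposed as a callable that takes the page text; the two lambdas are hypothetical stand-ins to be replaced by the real Ridge and Passive Aggressive calls.

```python
import statistics
import time


def median_classification_time(classify, text, repeats=5):
    """Call classify(text) `repeats` times; return the median wall-clock time in seconds.

    The median is less sensitive than the mean to one-off pauses
    (garbage collection, other processes).
    """
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        classify(text)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def compare(classifiers, text, repeats=5):
    """Map each classifier name to its median time on the same text."""
    return {name: median_classification_time(fn, text, repeats)
            for name, fn in classifiers.items()}


# Hypothetical stand-ins; substitute the real classifier calls.
page_text = "word " * 1008
results = compare({
    "count words": lambda t: len(t.split()),
    "sort words": lambda t: sorted(t.split()),
}, page_text)
for name, seconds in results.items():
    print(f"{name}: {seconds:.6f} s")
```

Timing every algorithm on the same text is what makes the comparison fair; `time.perf_counter` is used because it is the monotonic, high-resolution clock intended for measuring short intervals.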

For example, classification times for a page containing 1008 words:

- Ridge algorithm: 0.1589 s
- Passive Aggressive algorithm: 0.1709 s
- Passive Aggressive algorithm (upgraded): 1.0734 s
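A quick calculation shows what these per-page times mean at scale. The 100,000-page volume is a hypothetical figure, and the extrapolation assumes every page takes as long as this 1008-word page on a single sequential worker.

```python
# Per-page classification times reported above for a 1008-word page (seconds).
times = {
    "Ridge": 0.1589,
    "Passive Aggressive": 0.1709,
    "Passive Aggressive Upgraded": 1.0734,
}
pages = 100_000  # hypothetical volume, e.g. one month of crawling

slowdown = times["Passive Aggressive Upgraded"] / times["Passive Aggressive"]
hours = {name: t * pages / 3600 for name, t in times.items()}

print(f"Upgraded vs plain Passive Aggressive: {slowdown:.2f}x")  # 6.28x
for name, h in hours.items():
    print(f"{name}: {h:.1f} h for {pages:,} pages")
# Ridge: 4.4 h, Passive Aggressive: 4.7 h, Passive Aggressive Upgraded: 29.8 h
```

For this page the upgraded variant is about 6.3 times slower, which adds roughly 25 hours of computation per 100,000 pages under these assumptions.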

From a commercial point of view, when hundreds of thousands of websites are processed, even the smallest reduction in classification time has a positive effect on the business over a month or a quarter. A great advantage is that the parameters are configurable, and their settings depend on the available computing power. As computing power increases, both the number of pages classified and the effectiveness of the classification can improve. This also enables more effective management, which has a real impact on website security on the Internet.
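Choosing a setting based on available computing power can be sketched as picking the most effective configuration that still meets a daily volume. This assumes perfectly parallel, fully busy workers and uses the 1008-word timings as representative; the 300,000 pages/day target and the function names are hypothetical. The ordering of the two options relies only on the statement above that the upgraded Passive Aggressive variant achieves slightly better results.

```python
SECONDS_PER_DAY = 86_400


def daily_capacity(seconds_per_page, workers):
    """Pages per day, assuming perfectly parallel, fully busy workers."""
    return int(workers * SECONDS_PER_DAY / seconds_per_page)


def choose_configuration(options, pages_per_day, workers):
    """Pick the most effective option that still meets the daily volume.

    `options` is a list of (name, seconds_per_page) ordered from least to most
    effective; returns None if no option is fast enough.
    """
    feasible = [name for name, spp in options
                if daily_capacity(spp, workers) >= pages_per_day]
    return feasible[-1] if feasible else None


options = [("Passive Aggressive", 0.1709), ("Passive Aggressive Upgraded", 1.0734)]
for workers in (1, 4):
    print(workers, choose_configuration(options, 300_000, workers))
# 1 Passive Aggressive
# 4 Passive Aggressive Upgraded
```

With one worker only the faster variant keeps up with the hypothetical volume; with four, the slower but more effective variant becomes affordable.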

The Passive Aggressive and Ridge website classification solution is SSL-certified and protects against accidental data leakage.